Why Do Robots Need 3D Vision Systems for Enhanced Navigation and Interaction?

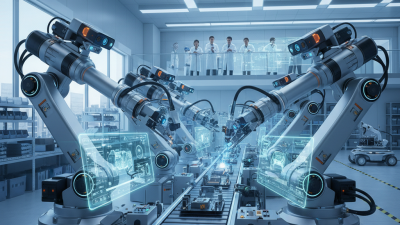

Robots are playing a growing role in sectors from warehousing to healthcare, and 3D vision systems are central to how they navigate and interact. By adding depth to what a camera sees, these systems let a robot estimate how far away each surface is, not just what it looks like, and so understand its environment better.

Imagine a robot navigating through a crowded space. It must avoid obstacles, recognize objects, and interact with humans. Conventional 2D cameras often fall short in such dynamic settings: a flat image carries no direct distance information, so a robot relying on one may misjudge distances or fail to notice a nearby obstacle. This is a significant gap in robotic technology.

3D vision systems help robots overcome these limitations, enabling complex tasks such as picking up fragile items or recognizing faces. They are not perfect, however. No 3D vision system interprets every scenario flawlessly, and robots can still misjudge depth or struggle when lighting changes. Addressing these weaknesses requires continued refinement and innovation.

Benefits of 3D Vision Systems in Robotic Navigation

3D vision systems are essential for modern robots. They let robots perceive their environment in three dimensions, which significantly improves navigation and interaction. With depth data, a robot can detect obstacles more accurately and move through complex spaces, such as warehouses and homes, more reliably. Depth perception is especially important for collision avoidance: knowing how far away an obstacle is lets the robot slow down or stop in time, which helps prevent accidents and improves overall safety.
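As a rough illustration of how depth data supports collision avoidance, the sketch below finds the nearest obstacle in a point cloud and compares it with a safety radius. It assumes the points are already expressed in the robot's own frame (x forward, y left, z up, in metres); the function names, the height band, and the 0.5 m radius are hypothetical values chosen for the example, not settings from any particular product.

```python
import numpy as np


def nearest_obstacle_distance(points, min_height=0.05, max_height=1.5):
    """Horizontal distance (m) to the closest point ahead that could block the robot.

    Assumed frame (hypothetical): x forward, y left, z up, metres.
    """
    pts = np.asarray(points, dtype=float).reshape(-1, 3)
    x, y, z = pts[:, 0], pts[:, 1], pts[:, 2]
    # Keep points in front of the robot, above the floor, and below its height.
    mask = (x > 0.0) & (z > min_height) & (z < max_height)
    if not np.any(mask):
        return float("inf")
    return float(np.min(np.hypot(x[mask], y[mask])))


def collision_risk(points, safety_radius=0.5, **band):
    """True if any relevant point lies closer than the (hypothetical) safety radius."""
    return nearest_obstacle_distance(points, **band) < safety_radius


cloud = np.array([
    [2.0, 0.0, 0.5],   # box two metres ahead
    [0.4, 0.1, 0.0],   # floor return, ignored
    [0.3, 0.0, 2.0],   # overhead shelf, ignored
    [-0.2, 0.0, 0.5],  # behind the robot, ignored
])
print(nearest_obstacle_distance(cloud))
print(collision_risk(cloud))
```

Filtering by a height band is a simple way to ignore the floor and overhead structures; a real system would also account for the robot's footprint and for sensor noise.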

3D vision also aids object recognition. With information about shape and size, robots can identify and classify objects more reliably and grasp them more effectively, which is critical in tasks like sorting or delivery. Implementing 3D vision is not easy, however. Depth sensors produce large volumes of data that algorithms must process quickly, the hardware can be expensive, and performance may suffer in certain environments, such as bright sunlight or low light.

While the benefits are clear, there is still room for improvement. Calibrating 3D vision systems can be tricky, and small calibration errors can translate into significant errors in where the robot believes objects are. These systems need continuous refinement to perform well. Overall, the potential of 3D vision in robotics is immense, but hurdles remain.
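To see why small errors matter, consider a stereo camera, one common type of 3D vision sensor. For a rectified stereo pair, depth is Z = f·B/d, where f is the focal length in pixels, B the baseline between the two cameras, and d the disparity in pixels. The sketch below uses hypothetical values (f = 700 px, B = 0.1 m) to show how a constant one-pixel disparity error, such as might come from a slightly miscalibrated camera, affects depth estimates at different ranges.

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth (m) from disparity for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px, baseline_m, true_depth_m, disparity_error_px):
    """Depth error (m) caused by a fixed disparity error at a given true depth."""
    true_disparity = focal_px * baseline_m / true_depth_m
    measured = stereo_depth(focal_px, baseline_m, true_disparity + disparity_error_px)
    return measured - true_depth_m


# Hypothetical camera: 700 px focal length, 10 cm baseline, 1 px disparity error.
for z in (1.0, 5.0, 10.0):
    print(f"{z:5.1f} m -> error {depth_error(700.0, 0.1, z, 1.0):+.3f} m")
```

With these numbers, the same one-pixel error shifts the estimate by about 1.4 cm at 1 m but by 1.25 m at 10 m, because the error grows roughly with the square of the distance.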

The Role of Depth Perception in Robot Interaction

Depth perception is critical for robots. It lets them understand their surroundings in three dimensions, which improves how they interact with both objects and people. A robot with 3D vision can measure the distance to what it sees and use that information to move through complex environments more effectively. Achieving accurate depth perception, however, is challenging.
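For a depth camera, measuring distances usually starts by converting each depth pixel into a 3D point. The sketch below applies the standard pinhole camera model: a pixel (u, v) with depth Z maps to X = (u - cx)·Z/fx and Y = (v - cy)·Z/fy, where fx, fy, cx, and cy are the camera intrinsics. It assumes a depth image already in metres and the common camera-frame convention (x right, y down, z forward); in practice the intrinsics would come from the sensor's calibration.

```python
import numpy as np


def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image (metres) into an N x 3 array of camera-frame points."""
    depth = np.asarray(depth, dtype=float)
    h, w = depth.shape
    # u = column index, v = row index, each with the same shape as the image.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    points = np.stack([x, y, depth], axis=-1).reshape(-1, 3)
    # Drop pixels without a valid reading (zero or NaN depth).
    valid = np.isfinite(points[:, 2]) & (points[:, 2] > 0)
    return points[valid]


depth = np.array([[2.0, 0.0],
                  [1.0, 1.0]])
pts = depth_to_points(depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
print(pts)
print(np.linalg.norm(pts, axis=1))  # straight-line distance to each point
```

The toy intrinsics (fx = fy = 1, cx = cy = 0) only keep the numbers easy to check; the pixel with zero depth is treated as a missing reading and dropped.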

Not every robot achieves accurate depth perception. Some systems struggle under certain lighting conditions or when objects overlap, and these limitations make interaction less smooth. For instance, a robot may misjudge its distance to a person, leading to awkward movements or unintended collisions.
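One way to keep a misjudged distance from turning into a collision is to plan with a conservative estimate: subtract the sensor's expected depth error from the measured distance to a person, then limit speed accordingly. The function below is a hypothetical, simplified speed-scaling rule; the stop distance, slow-down distance, and maximum speed are placeholder values, not figures from any safety standard.

```python
def speed_limit(measured_m, uncertainty_m,
                stop_m=0.5, slow_m=2.0, max_speed=1.0):
    """Allowed speed (m/s) given the measured distance to a person.

    All thresholds are hypothetical placeholders.
    """
    # Assume the person may be closer than measured by the depth uncertainty.
    conservative = measured_m - uncertainty_m
    if conservative <= stop_m:
        return 0.0
    if conservative >= slow_m:
        return max_speed
    # Ramp speed linearly between the stop and slow-down distances.
    return max_speed * (conservative - stop_m) / (slow_m - stop_m)


print(speed_limit(3.0, 0.1))   # far away: full speed
print(speed_limit(1.35, 0.1))  # inside the slow-down zone: reduced speed
print(speed_limit(0.6, 0.2))   # could be within 0.5 m: stop
```

A larger uncertainty makes the robot slow down earlier, so poorer depth perception costs speed rather than safety.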

The need for improvement is clear. Researchers are exploring better sensors and algorithms, and advances in artificial intelligence may also help. Flawless interaction, however, remains a work in progress, and each step offers lessons for future designs.

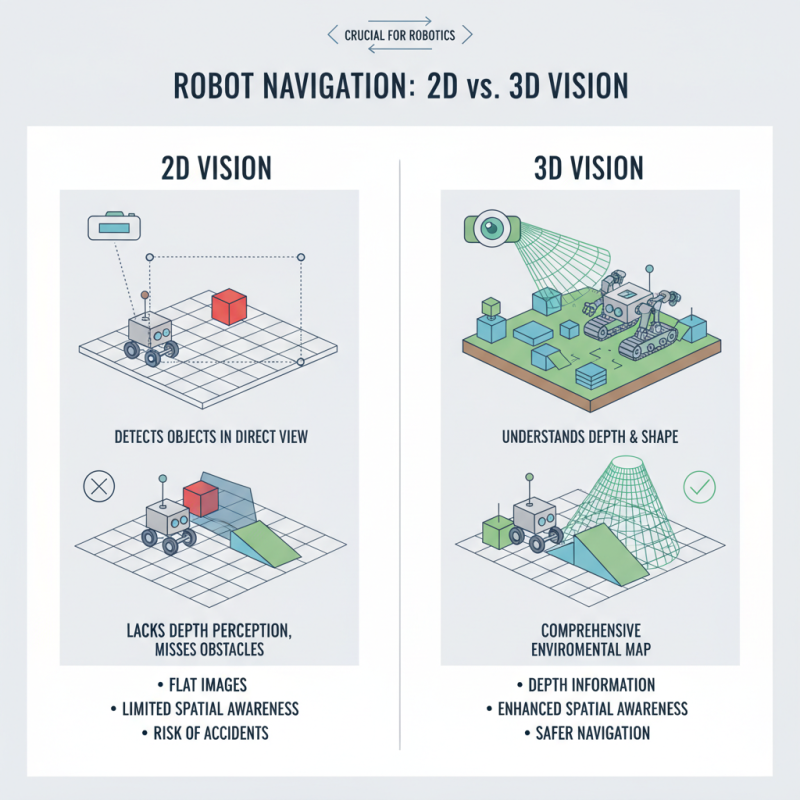

Comparison of 2D vs 3D Vision Technologies for Robots

The choice between 2D and 3D vision is a key decision for robot navigation. 2D systems capture flat images that carry no direct depth information. Robots using 2D vision can recognize objects but struggle with spatial awareness: in a single image, a small object nearby and a large object far away can look the same, so the robot may misjudge where obstacles are. This limitation can lead to accidents.

3D vision systems, by contrast, provide depth perception and build a more complete picture of the environment. Robots with 3D sensors can navigate complex spaces more efficiently and distinguish between objects at different distances, which improves their interactions in cluttered scenes. 3D vision has its costs, though: it usually requires more computational power and can be sensitive to lighting conditions.
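Depth is what lets a robot separate objects that overlap in a flat image. As a deliberately simple sketch (not a production segmentation method), the function below sorts the valid depth readings and starts a new layer wherever consecutive depths jump by more than a gap threshold; the function name and the 0.3 m gap are hypothetical choices.

```python
import numpy as np


def depth_layers(depth, gap_m=0.3):
    """Label each pixel with a depth-layer index; 0 means no valid reading."""
    depth = np.asarray(depth, dtype=float)
    labels = np.zeros(depth.shape, dtype=int)
    valid = np.isfinite(depth) & (depth > 0)
    if not np.any(valid):
        return labels
    values = np.sort(depth[valid])
    # A new layer starts wherever consecutive sorted depths jump by more than gap_m.
    breaks = values[1:][np.diff(values) > gap_m]
    # Layer index = 1 + number of break points at or below each pixel's depth.
    labels[valid] = np.searchsorted(breaks, depth[valid], side="right") + 1
    return labels


depth = np.array([[1.0, 1.1, 0.0],
                  [3.0, 3.05, 1.05]])
print(depth_layers(depth))
```

Here the near object (about 1 m) and the far object (about 3 m) land in different layers even if they overlap in the image. Because the method only looks at depth values, it merges separate objects that sit at the same distance; real systems typically combine depth with spatial clustering or appearance.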

2D systems are simpler, but they may not meet the demands of advanced robotics. 3D vision enables better obstacle detection, yet it brings new challenges, and developers must keep refining their algorithms to improve accuracy and reliability. This ongoing work is a major driver of innovation in robotics.

Real-World Applications of 3D Vision in Robotics

Outside the lab, 3D vision lets robots perceive their surroundings in detail and make sense of complex environments. A delivery robot, for example, moves along busy sidewalks by recognizing obstacles and adjusting its path as the scene changes.

Healthcare is another promising area. Surgical robots use 3D vision to analyze a patient's anatomy, which helps them perform intricate tasks with precision. Imperfect vision can still lead to errors, however, so these robots need constant adjustment and improvement, and continuous testing is crucial before they are trusted in real procedures.

Tips: Design for the full range of lighting conditions the robot will encounter, since lighting affects how well it perceives depth. Evaluate your vision algorithms regularly and make sure they adapt to changing environments. Finally, don't overlook user feedback; it is valuable for refining functionality.
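Regular evaluation is easier with a fixed metric. A minimal sketch, assuming you have ground-truth depth for a test scene (for example from a calibrated reference sensor), is to record two numbers per lighting condition: coverage, the fraction of ground-truth pixels where the sensor returned a valid reading, and the mean absolute error over those pixels. The function name and the readings below are made-up illustrative values, not measurements.

```python
import numpy as np


def depth_metrics(predicted, ground_truth):
    """Coverage and mean absolute error (m) of a depth map against ground truth."""
    pred = np.asarray(predicted, dtype=float)
    gt = np.asarray(ground_truth, dtype=float)
    gt_valid = np.isfinite(gt) & (gt > 0)
    # A pixel counts only if both the sensor and the ground truth have valid depth.
    both = gt_valid & np.isfinite(pred) & (pred > 0)
    coverage = both.sum() / max(gt_valid.sum(), 1)
    mae = float(np.mean(np.abs(pred[both] - gt[both]))) if both.any() else float("nan")
    return {"coverage": float(coverage), "mae_m": mae}


ground_truth = [[1.0, 2.0], [3.0, 4.0]]
readings = {
    "indoor":   [[1.0, 2.1], [2.9, 4.0]],
    "sunlight": [[1.0, 0.0], [np.nan, 4.2]],  # illustrative dropouts (0 / NaN)
}
for condition, depth in readings.items():
    print(condition, depth_metrics(depth, ground_truth))
```

Tracking coverage separately from error matters because a sensor can look accurate while silently returning no data for much of the scene.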

3D Vision Systems in Robotics: Applications and Benefits

The bar chart above illustrates the importance levels of various applications of 3D vision systems in robotics on a scale from 1 to 10. Autonomous navigation and obstacle detection are highlighted as critical applications, showcasing the necessity of advanced vision systems for robots in real-world scenarios.

Challenges in Implementing 3D Vision Systems for Robots

Implementing 3D vision systems in robots presents significant challenges. The technology depends on complex algorithms, powerful hardware, and precise sensors, yet sensor accuracy can vary and degrade performance. According to a report by the International Journal of Advanced Robotics, 30% of robot failures are linked to visual perception issues.

Environmental factors add to the complexity. Lighting conditions, surface textures, and obstacles can confuse 3D vision systems, causing robots to misinterpret an object's shape or distance. One study found that even slight changes in lighting can reduce accuracy by up to 25%. Such inconsistencies undermine reliable navigation and interaction.

Integration with existing systems can also be problematic. Many robots already run established navigation frameworks, and introducing 3D vision can require significant reprogramming, so developers should allocate ample time for testing. An assessment by the Robotics Innovation Lab found that about 40% of projects face delays due to integration issues. These hurdles are a reminder that refining 3D vision systems is a continual process.
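One common way to ease integration is to convert 3D data into a format an existing 2D navigation stack already understands, such as an occupancy grid. The sketch below flattens robot-frame points (assumed x forward, y left, z up, in metres) into a square boolean grid centred on the robot, keeping only points in a height band that could block the robot. The function name, grid size, resolution, and height limits are hypothetical placeholder values.

```python
import numpy as np


def points_to_occupancy(points, resolution=0.1, size=40,
                        min_height=0.05, max_height=1.5):
    """Flatten robot-frame points into a size x size occupancy grid.

    The robot sits at cell (size // 2, size // 2); rows follow x, columns follow y.
    """
    pts = np.asarray(points, dtype=float).reshape(-1, 3)
    # Keep points that could block the robot: above the floor, below its height.
    pts = pts[(pts[:, 2] > min_height) & (pts[:, 2] < max_height)]
    grid = np.zeros((size, size), dtype=bool)
    half = size // 2
    # Convert metric coordinates to integer cell indices.
    ix = np.floor(pts[:, 0] / resolution).astype(int) + half
    iy = np.floor(pts[:, 1] / resolution).astype(int) + half
    inside = (ix >= 0) & (ix < size) & (iy >= 0) & (iy < size)
    grid[ix[inside], iy[inside]] = True
    return grid


cloud = np.array([
    [0.26, -0.14, 0.5],  # obstacle slightly ahead and to the right
    [0.30, 0.00, 0.0],   # floor, ignored
    [10.0, 0.00, 0.5],   # beyond the 4 m x 4 m grid, ignored
])
grid = points_to_occupancy(cloud)
print(np.argwhere(grid))  # occupied cell indices
```

In practice the grid would also need to be transformed into the navigation stack's map frame and updated over time; the sketch covers only the flattening step.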

Related Posts

-

Top 10 Amazing Innovations in Robot Vision Technology You Need to Know

-

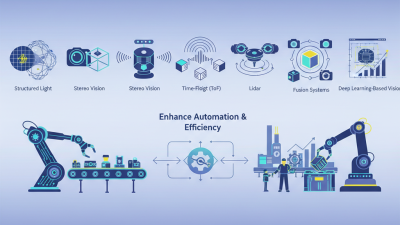

7 Best 3D Vision Systems for Robots: Enhance Automation & Efficiency

-

Exploring the Future of Robotic Laser Welding in Advanced Manufacturing Techniques

-

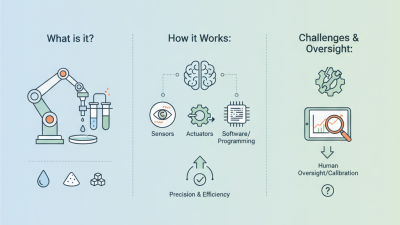

What is a dispensing robot and how does it work?

-

How to Leverage Robotic Vision for Enhanced Automation in 2025

-

How to Master Robotic Arc Welding: Tips, Techniques, and Best Practices